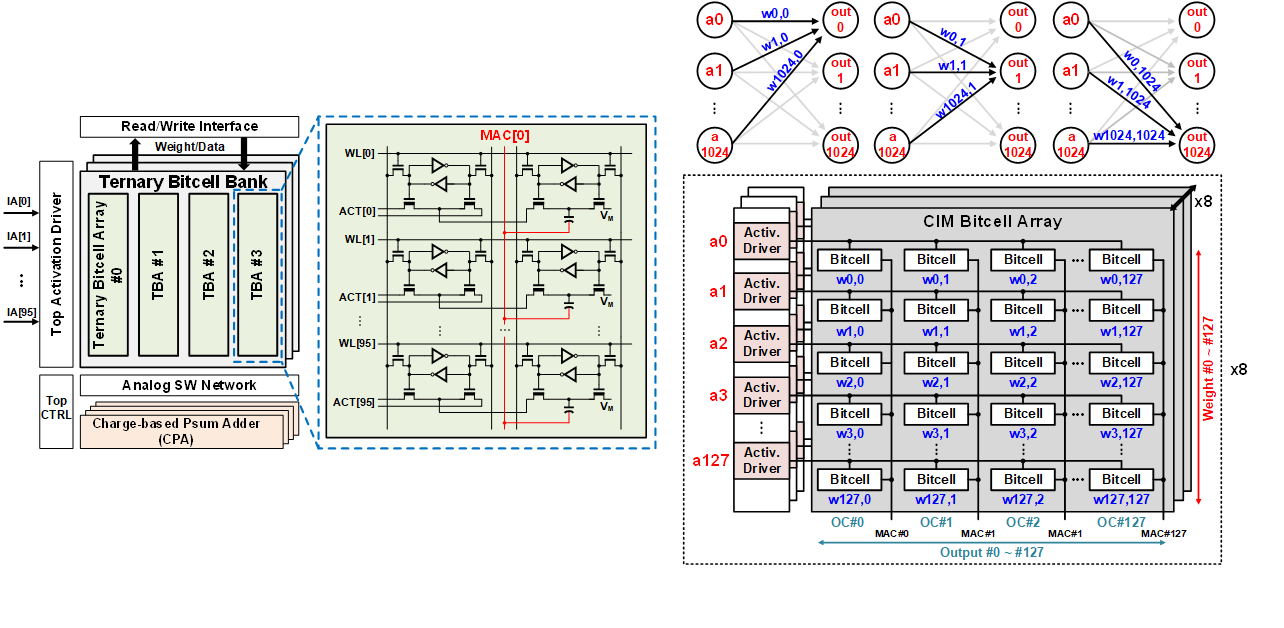

Recently, Convolutional Neural Networks (CNNs) have greatly improved Artificial Intelligence applications through their high accuracy in image and video processing. CNNs are well known to require large amounts of computation and a large memory footprint, which limits performance and energy efficiency on conventional von Neumann architectures because of the massive data movement between memory and processor. Computing-in-Memory (CIM) architecture, a promising solution for ultra-low-power IoT devices, has been proposed to replace the conventional computer architecture by processing inside the on-chip memory to achieve high energy efficiency. Several transistors are added near (or within) the memory bitcell to support simple computations. CIM shows a remarkable improvement in throughput and energy efficiency because it eliminates the need to fetch data from memory into a separate computation unit over a data bus.
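To give a rough sense of the scale involved, the sketch below counts the weights and multiply-accumulate (MAC) operations of a single standard convolution layer. The function name `conv2d_cost` and the layer shape are hypothetical and chosen only for illustration. Biases, padding, and stride details are ignored.

```python
def conv2d_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Return (weights, macs) for one standard convolution layer.

    Assumes a square kernel and ignores biases. Each output element
    needs in_ch * kernel * kernel multiply-accumulate operations.
    """
    weights = out_ch * in_ch * kernel * kernel
    macs = weights * out_h * out_w
    return weights, macs


# Hypothetical layer: 64 -> 128 channels, 3x3 kernel, 56x56 output map.
weights, macs = conv2d_cost(in_ch=64, out_ch=128, kernel=3, out_h=56, out_w=56)
print(f"weights: {weights:,}")  # weights: 73,728
print(f"MACs:    {macs:,}")     # MACs:    231,211,008
```

Each MAC consumes one weight and one activation as operands. In a von Neumann design, any operand not already held close to the compute unit has to be moved there from memory, and this is the traffic that CIM aims to avoid.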

Processing-in-Memory

Computing-in-Memory